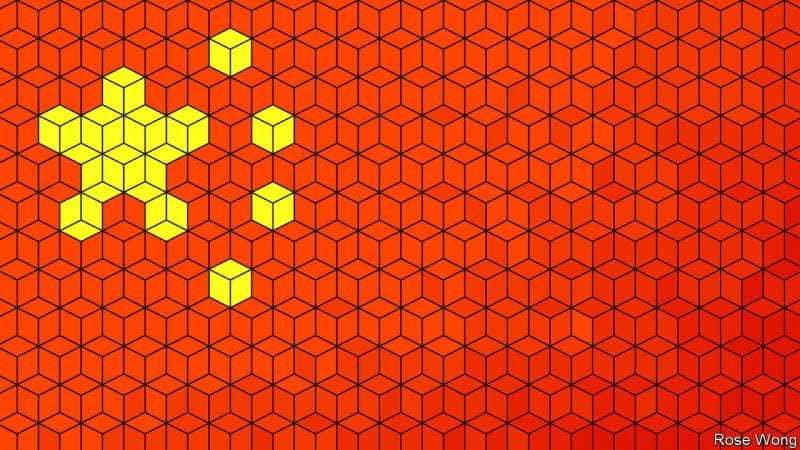

Shannon's excerpt from the article: "💯🏆Economist [excerpt]: In 2000 Bill Clinton, then America’s president, likened #China’s attempt to control the #internet to 'trying to nail Jell-O to the wall'. Today the jello seems firmly in place. Western internet services, from #Facebook and #Google search to #Netflix are unavailable to most Chinese (apart from those willing to run the risk of using illegal 'virtual private networks'). On local platforms, any undesirable content is deleted, either pre-emptively by the platforms themselves, using #algorithms and armies of moderators, or afterwards, as soon as it is spotted by government #censors. A #tech crackdown in 2020 brought China’s powerful tech giants, such as #Tencent and #Alibaba, to heel—and closer to the government, which has been taking small stakes in the firms, and a big interest in their day-to-day operations.

The result is a #digitaleconomy that is sanitised but nevertheless thriving. Tencent’s super-app, #WeChat, which combines #messaging, #socialmedia, #ecommerce and #payments, alone generates hundreds of billions of dollars in yearly transactions. Mr Xi now hopes to pull off a similar balancing act with #ai. Once again, some foreign experts predict a jello situation. And once again, the Communist Party is building tools to prove them wrong.

The party’s efforts begin with the world’s toughest rules for Chinese equivalents of #ChatGPT (which is, predictably, banned in China) and other consumer-facing '#generative' AI. Since March companies have had to register with officials any algorithms that make recommendations or can #influence people’s decisions. In practice, that means basically any such #software aimed at #consumers. In July the government issued rules requiring all AI-generated content to 'uphold socialist values'—in other words, no bawdy songs, anti-party slogans or, heaven forbid, poking fun at Mr Xi. In September it published a list of 110 registered services. Only their developers and the government know all the ins and outs of the registration process and the precise criteria involved.

In October a standards committee for national information security published a list of safety guidelines requiring detailed self-assessment of the data used to train generative-AI models. One rule requires the manual testing of at least 4,000 subsets of the total training data; at least 96% of these must qualify as 'acceptable', according to a list of 31 vaguely worded safety risks. The first criterion for unacceptable content is anything that 'incites subversion of state power or the overthrowing of the socialist system'."

#news #business #artificialintelligence #2024trends #technology #censorship